Sensor Fusion, Autonomy, and Control

Using data collection, analysis, and fusion for robotic learning and decision-making.


Sensor Fusion for Simultaneous Localization and Mapping (SLAM)

For my Mechanical Engineering capstone project at Notre Dame, my team and I were tasked with building a hovercraft that could autonomously carry a 3 lb payload along a path that was not revealed until the day of the trial. I was responsible for both localization and control of the hovercraft.

For localization, we used the SLAM Toolbox from Nav2 in ROS 2. We fused optical flow and IMU data into an odometry estimate, then fused that estimate with LiDAR data so the SLAM algorithm could localize the robot. Finally, we numerically differentiated the position estimates to obtain velocity and acceleration.
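The odometry and differentiation steps can be illustrated with a minimal sketch. It assumes the optical flow readings have already been converted to metric, body-frame planar velocity and that the IMU supplies a heading (yaw) angle at the same timestamps. The function names are hypothetical, the simple dead-reckoning integration stands in for whatever filter the project actually used, and the LiDAR/SLAM stage is omitted. Heading rotates each body-frame velocity into the world frame before integration, and central differences recover velocity and acceleration from sampled positions.

```python
import math
from typing import List, Tuple


def integrate_odometry(
    body_vel: List[Tuple[float, float]],  # (vx, vy) from optical flow, body frame, m/s (assumed)
    yaw: List[float],                     # heading from the IMU, rad (assumed)
    dt: float,                            # sample period, s
    start: Tuple[float, float] = (0.0, 0.0),
) -> List[Tuple[float, float]]:
    """Dead-reckon planar position by rotating body-frame velocity into the world frame."""
    x, y = start
    path = [(x, y)]
    for (vx, vy), th in zip(body_vel, yaw):
        c, s = math.cos(th), math.sin(th)
        # Rotate the body-frame velocity by the IMU heading, then integrate one step.
        x += (c * vx - s * vy) * dt
        y += (s * vx + c * vy) * dt
        path.append((x, y))
    return path


def differentiate(samples: List[float], dt: float) -> List[float]:
    """Central differences in the interior, one-sided differences at the two ends."""
    n = len(samples)
    if n < 2:
        raise ValueError("need at least two samples")
    out = [0.0] * n
    out[0] = (samples[1] - samples[0]) / dt
    out[-1] = (samples[-1] - samples[-2]) / dt
    for i in range(1, n - 1):
        out[i] = (samples[i + 1] - samples[i - 1]) / (2 * dt)
    return out


# Toy usage: moving forward at 1 m/s while heading along +y (yaw = pi/2).
dt = 0.1
path = integrate_odometry([(1.0, 0.0)] * 10, [math.pi / 2] * 10, dt)
ys = [p[1] for p in path]
vy = differentiate(ys, dt)   # velocity along y
ay = differentiate(vy, dt)   # acceleration along y
```

Differentiating twice amplifies sensor noise, so in practice the positions would usually be smoothed or low-pass filtered first.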

For control, we used a tri-directional PID controller that commanded the hovercraft's thrust fans to produce instantaneous forces and moments in any direction. Fan thrusts were updated according to the offset between the hovercraft's own coordinate frame and the global coordinate frame.
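A minimal sketch of this control idea, assuming "tri-directional" means independent PID loops on x, y, and heading computed in the global frame, with the resulting force command rotated into the hovercraft's body frame using its current heading. The class and function names and the gains are hypothetical, and the final step of distributing the body-frame forces and moment across the six fans is left out because it depends on fan geometry not described here.

```python
import math
from dataclasses import dataclass
from typing import Optional, Sequence, Tuple


@dataclass
class PID:
    kp: float
    ki: float
    kd: float
    integral: float = 0.0
    prev_error: Optional[float] = None

    def update(self, error: float, dt: float) -> float:
        """Return the PID output for this error sample."""
        self.integral += error * dt
        # No derivative term on the first sample, since there is no previous error yet.
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def wrap_angle(a: float) -> float:
    """Wrap an angle to [-pi, pi) so heading errors take the short way around."""
    return (a + math.pi) % (2 * math.pi) - math.pi


def control_step(
    pids: Sequence[PID],
    pose: Tuple[float, float, float],    # current (x, y, yaw), global frame
    target: Tuple[float, float, float],  # desired (x, y, yaw), global frame
    dt: float,
) -> Tuple[float, float, float]:
    """Return (Fx_body, Fy_body, Mz): force in the body frame plus yaw moment."""
    x, y, yaw = pose
    tx, ty, tyaw = target
    fx_world = pids[0].update(tx - x, dt)
    fy_world = pids[1].update(ty - y, dt)
    mz = pids[2].update(wrap_angle(tyaw - yaw), dt)
    # Rotate the global-frame force into the body frame (rotation by -yaw).
    c, s = math.cos(yaw), math.sin(yaw)
    fx_body = c * fx_world + s * fy_world
    fy_body = -s * fx_world + c * fy_world
    return fx_body, fy_body, mz


# Usage with hypothetical gains: craft faces +y, target lies 1 m along global +x.
pids = [PID(kp=2.0, ki=0.1, kd=0.5) for _ in range(3)]
fx, fy, mz = control_step(pids, pose=(0.0, 0.0, math.pi / 2), target=(1.0, 0.0, math.pi / 2), dt=0.05)
```

Because the craft is facing +y, a target in the global +x direction lies to its right, so the command comes out almost entirely along the negative body-frame y axis.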

The hovercraft performed well. In most environments it localized itself to within 10 cm of its true position, and in most situations the estimate was much closer than that. For control, it followed every path it was given to within 1/3 meter of the desired waypoints.
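One plausible way to compute a waypoint-tracking figure like the one above is to measure, for each waypoint, the distance to the nearest point on the estimated path (treated as connected line segments) and compare the worst case against the tolerance. This metric and the function names are assumptions for illustration, not necessarily how the project scored its runs, and the inputs below are toy values rather than project data.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def point_to_segment(p: Point, a: Point, b: Point) -> float:
    """Euclidean distance from point p to the line segment ab."""
    (px, py), (ax, ay), (bx, by) = p, a, b
    dx, dy = bx - ax, by - ay
    seg_len_sq = dx * dx + dy * dy
    if seg_len_sq == 0.0:
        return math.hypot(px - ax, py - ay)
    # Project p onto the line through a and b, clamped to stay on the segment.
    t = max(0.0, min(1.0, ((px - ax) * dx + (py - ay) * dy) / seg_len_sq))
    return math.hypot(px - (ax + t * dx), py - (ay + t * dy))


def waypoint_errors(waypoints: List[Point], path: List[Point]) -> List[float]:
    """For each waypoint, the distance to the closest point on the piecewise-linear path."""
    if not path:
        raise ValueError("path is empty")
    if len(path) == 1:
        return [math.hypot(w[0] - path[0][0], w[1] - path[0][1]) for w in waypoints]
    return [
        min(point_to_segment(w, path[i], path[i + 1]) for i in range(len(path) - 1))
        for w in waypoints
    ]


# Toy inputs: a straight 2 m estimated path and three waypoints.
errors = waypoint_errors([(1.0, 0.25), (2.0, 0.5), (3.0, 0.0)], [(0.0, 0.0), (2.0, 0.0)])
within_tolerance = max(errors) <= 1.0 / 3.0
```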

More information about the project can be found here.

Final hovercraft design. The LiDAR is mounted on top of the hovercraft; the IMU and optical flow sensor are not visible. Six thrust fans line the exterior of the hovercraft.

Estimated path of the hovercraft (HDS) compared with the given path, defined by 25 waypoints spelling “IRISH” in cursive.

SLAM mapping of a hallway environment.

SLAM mapping of a large atrium for the final demonstration.

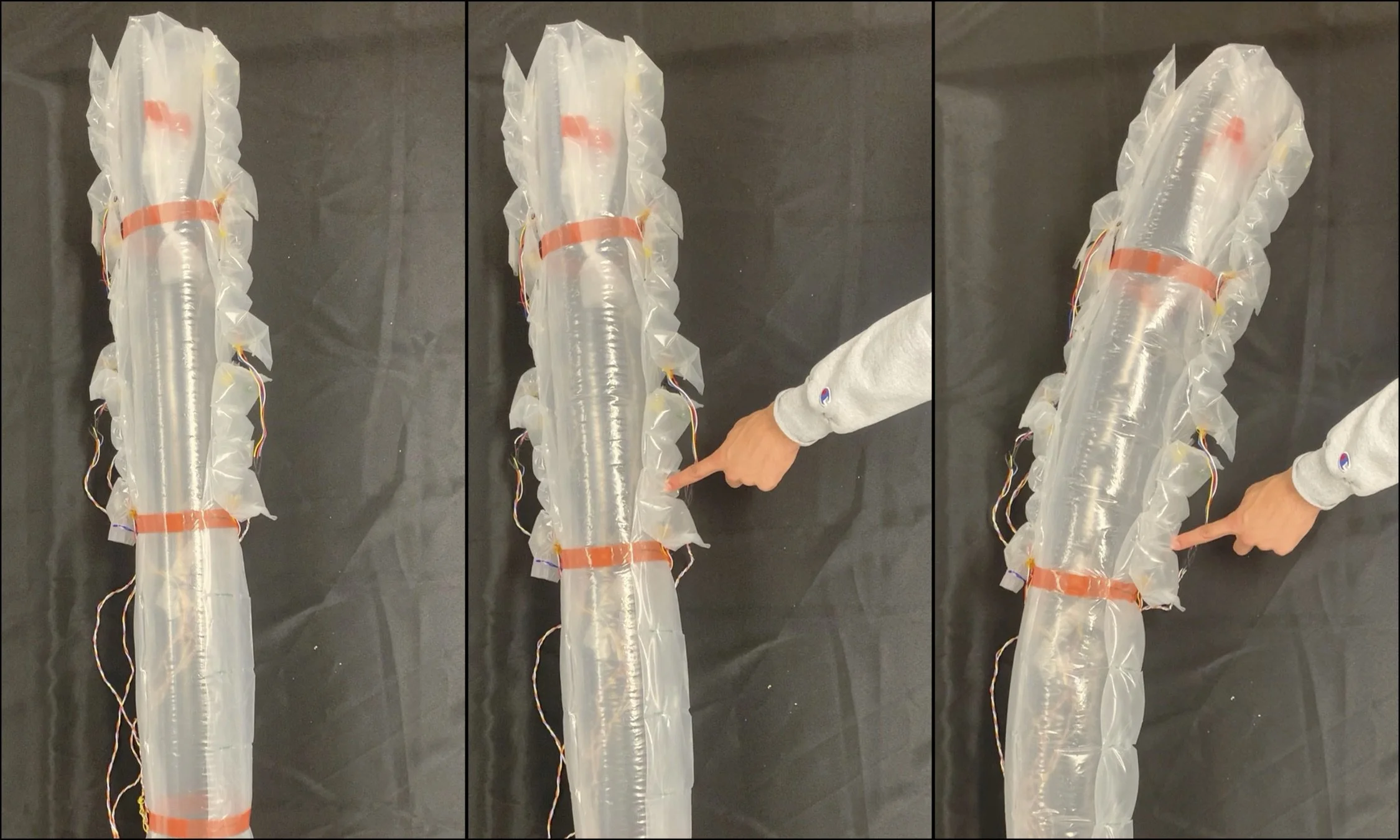

Soft Air Pocket Force Sensors for Large Scale Flexible Robots

Flexible robots have advantages over rigid robots in their ability to conform physically to their environment and to form a wide variety of shapes. Sensing the force applied by or to flexible robots is useful for both navigation and manipulation tasks, but it is challenging because the sensors must withstand the robot's shape changes without encumbering its functionality. In addition, for robots with long or large bodies, covering the entire surface with sensors can be prohibitively costly and complex.

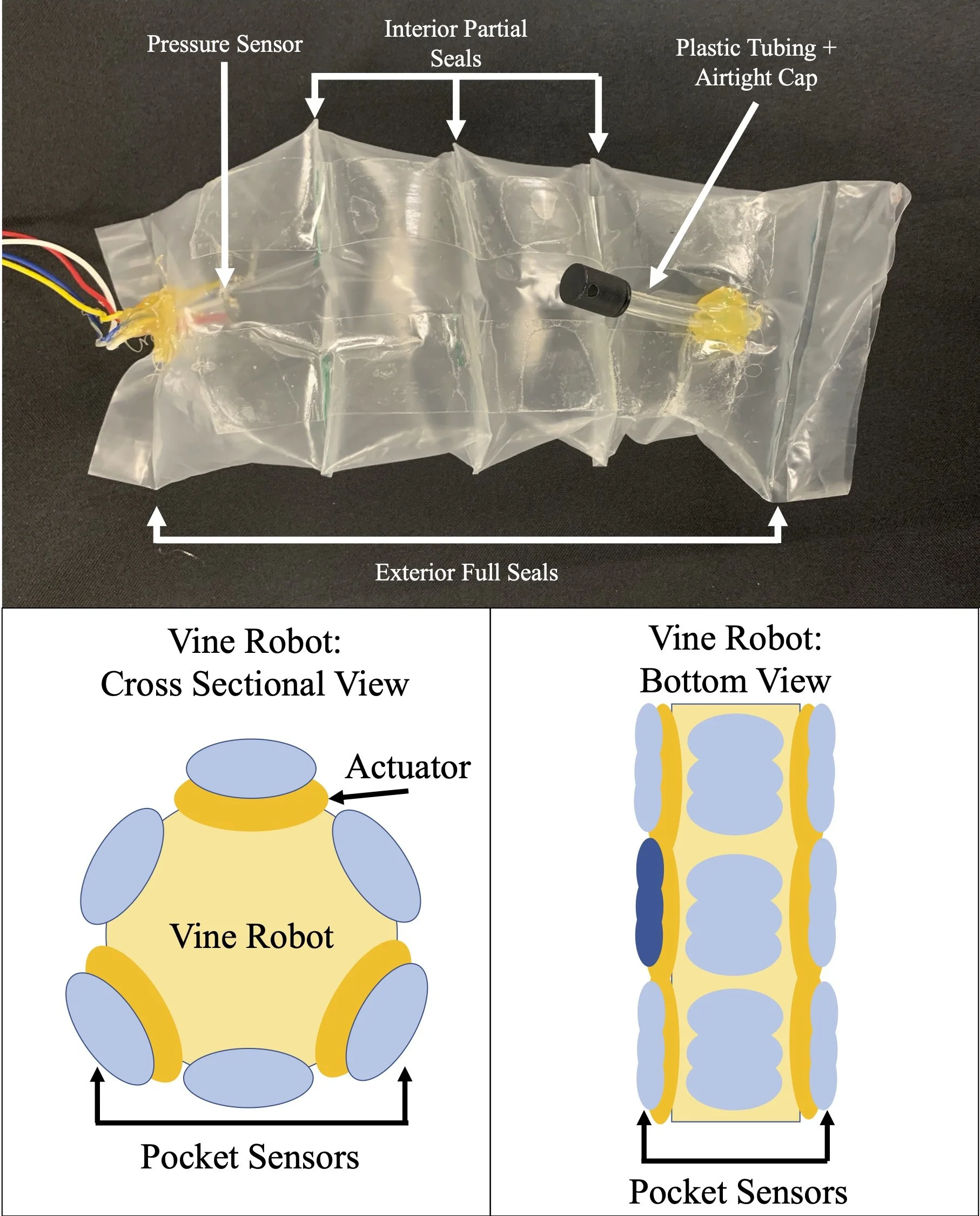

To meet this challenge, I designed a novel soft air pocket force sensor that is highly flexible, lightweight, relatively inexpensive, and easily scalable. Many factors besides the applied force can influence the sensor's internal pressure. Based on initial testing, I chose to collect data on five potential influences on the change in the pocket sensor's internal pressure: applied force, vine robot pressure, actuator pressure, initial pocket sensor pressure, and force contact area.

I also concluded that modeling the relationships among these factors analytically was nearly impossible. Instead, I ran a series of tests to collect enough data to apply machine learning techniques and empirically model the relationship between these input variables and the change in internal pressure.
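The empirical modeling step can be sketched with an ordinary least-squares linear model as a simple baseline. The text only says machine learning techniques were used, so this linear model is a swapped-in stand-in rather than the method actually applied, and the feature names are hypothetical labels for the five measured variables. Solving the normal equations with Gaussian elimination keeps the sketch dependency-free; the self-check at the end uses synthetic inputs, not measurements.

```python
from typing import List, Sequence

# Hypothetical names for the five measured inputs; the target is the change in pocket pressure.
FEATURES = ["applied_force", "vine_pressure", "actuator_pressure",
            "initial_pocket_pressure", "contact_area"]


def solve(A: List[List[float]], b: List[float]) -> List[float]:
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(A)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        if abs(M[pivot][col]) < 1e-12:
            raise ValueError("singular system; inputs may be collinear")
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        x[r] = (M[r][n] - sum(M[r][c] * x[c] for c in range(r + 1, n))) / M[r][r]
    return x


def fit_linear(X: Sequence[Sequence[float]], y: Sequence[float]) -> List[float]:
    """Least-squares weights [bias, w1..wk] from the normal equations (X^T X) w = X^T y."""
    rows = [[1.0] + list(r) for r in X]  # prepend a bias column
    k = len(rows[0])
    XtX = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    Xty = [sum(r[i] * yi for r, yi in zip(rows, y)) for i in range(k)]
    return solve(XtX, Xty)


def predict(w: Sequence[float], x: Sequence[float]) -> float:
    """Predicted change in internal pressure for one sample x."""
    return w[0] + sum(wi * xi for wi, xi in zip(w[1:], x))


# Self-check on synthetic data (not measurements): recover known weights exactly.
true_w = [0.5, 2.0, -1.0, 0.3, 0.1, -0.7]
X_demo = ([[0.0] * 5]
          + [[1.0 if j == i else 0.0 for j in range(5)] for i in range(5)]
          + [[1.0] * 5])
y_demo = [predict(true_w, x) for x in X_demo]
w_demo = fit_linear(X_demo, y_demo)
```

A linear baseline like this makes it easy to judge whether a more flexible model (for example, tree ensembles or a small neural network) is actually capturing nonlinear interactions among the inputs.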

More information about the initial stages of the project can be found here. Work on the empirical modeling is in progress.

Vine robot responding to outside force applied to the soft pocket sensor.

Design of the soft pocket sensor, which limits the effect of contact area while remaining flexible enough to evert and bend with a vine robot.